Ars Technica

DuckDuckGo announced on Wednesday that it won't be left out of the rush to incorporate generative AI into search, launching DuckAssist, an AI-powered factual summary service powered by technology from Anthropic and OpenAI. It's freely available today as a broad beta test for users of DuckDuckGo's browser extensions and browsing apps. Because DuckAssist is powered by an AI model, the company admits that it may make things up but hopes that will happen rarely.

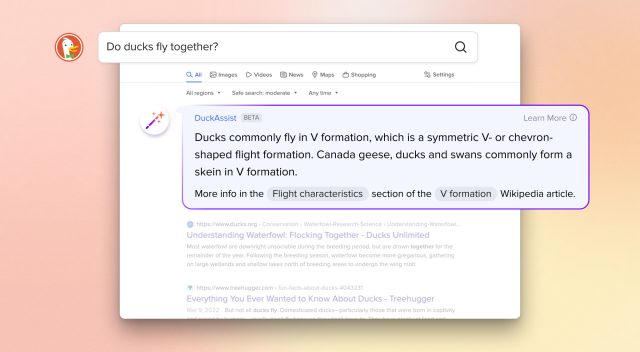

Here's how it works: If a DuckDuckGo user searches for a question that can be answered by Wikipedia, DuckAssist may appear and use natural language AI technology to generate a brief summary of what it finds on Wikipedia, with source links listed below. The summary appears in a special box above DuckDuckGo's regular search results.

The company positions DuckAssist as a new form of "Instant Answers," a feature that spares users from digging through web search results to find quick information on topics like news, maps, and weather. Instead, the search engine presents the Instant Answer results above the usual list of websites.


DuckDuckGo did not specify which large language model (LLM) or models power DuckAssist, although some form of the OpenAI API appears likely to be involved. Ars Technica has reached out to DuckDuckGo representatives for clarification. But Gabriel Weinberg, CEO of DuckDuckGo, explains how it uses sources in a company blog post:

DuckAssist answers questions by scanning a select set of sources – at the moment this is usually Wikipedia, and sometimes related sites like Britannica – using DuckDuckGo’s active indexing. Since we use natural language technology from OpenAI and Anthropic to summarize what we find on Wikipedia, these answers should respond more directly to your actual question than traditional search results or other instant answers.

Since DuckDuckGo's main selling point is privacy, the company says DuckAssist is "anonymous" and maintains that it doesn't share your search or browsing history with anyone. "We also keep your search and browsing history anonymous to our search content partners," Weinberg wrote, "in this case OpenAI and Anthropic, used for summarizing the Wikipedia sentences we identify."

If DuckDuckGo uses OpenAI's GPT-3 or ChatGPT API, one might worry that the company sends each user's query to OpenAI every time the feature is invoked. But reading between the lines, it appears that only the Wikipedia article (or an excerpt from it) is sent to OpenAI for summarization, not the user's search itself. We've reached out to DuckDuckGo to clarify this point, too.

DuckDuckGo describes DuckAssist as "the first in a series of AI-powered features we hope to roll out in the coming months." If the launch goes well (and nobody breaks it with adversarial prompts), DuckDuckGo plans to roll out the feature to all search users "in the coming weeks."

DuckDuckGo: Risk of hallucinations ‘significantly diminished’

As we've covered previously at Ars, LLMs tend to produce convincing falsehoods, which AI researchers call "hallucinations," a term of art in the field of artificial intelligence. Hallucinations can be difficult to spot unless you know the material being referenced, and they happen in part because OpenAI's GPT-style LLMs don't distinguish between fact and fiction in their training datasets. In addition, models can draw erroneous conclusions from otherwise accurate data.

On this point, DuckDuckGo hopes to avoid hallucinations by relying heavily on Wikipedia as a source: "By asking DuckAssist to only summarize information from Wikipedia and related sources," Weinberg writes, "the probability that it will 'hallucinate,' that is, just make something up, is greatly diminished."

While relying on a high-quality source of information may reduce errors stemming from misinformation in the AI's dataset, it may not reduce false inferences. DuckDuckGo also puts the burden of fact-checking on the user, providing a source link below the AI-generated summary that can be used to check its accuracy. But it won't be perfect, and Weinberg concedes as much: "Nonetheless, DuckAssist won't generate accurate answers all of the time. We fully expect it to make mistakes."

As more companies deploy LLM technology that can easily produce misinformation, it may take time and widespread use before companies and customers decide what level of hallucination is tolerable in an AI-powered product designed to inform people factually.
